AI Statistics for Non-Majors

arigaram

Without a single formula or line of code, this penetrates the essence of basic statistics necessary for AI development and application.

Beginner

AI

What can you do when you are building a generative AI or LLM-based RAG (Retrieval-Augmented Generation) system, the performance falls short of what you need, and no obvious fix is available? This lecture presents methods for improving RAG performance based on cognitive load theory. You will understand the limitations of LLM context windows and learn how to manage cognitive load effectively in RAG systems. It is a practice-oriented theory lecture covering chunk size and structure design, high-quality chunk generation techniques, dynamic optimization, performance evaluation, and practical techniques.

18 learners

Level Intermediate

Course period Unlimited

Strategies to understand and manage LLM context window and token limitations

How to create high-quality Chunks and integrate them into a RAG pipeline

The course is currently being completed. Please note that you may have to wait a long time until the course is fully finished (though I will add content regularly). Please consider this when making your purchase decision.

September 4, 2025

I've uploaded about 2/3 of the integrated summaries for each section. I'll upload the remaining integrated summaries one by one soon.

I separated Section 3 into Section 3 and Section 4, but this caused a mismatch between the section numbers in the course list and the class materials, which could lead to confusion, so I moved Section 4 to Section 31 (at the very end).

September 1, 2025

We have separated Section 3 into Section 3 and Section 4. As a result, the section numbers and lesson material numbers may not match. We will update the lesson materials and re-record the videos before posting them again. Thank you for your patience.

I'm reorganizing the table of contents to reduce confusion for students. Accordingly, I have made the classes that were temporarily set to private on August 22nd public again.

August 22, 2025

I have changed the lessons in the [Advanced] course (Sections 11-30) that are not yet completed to private status. I plan to make them public by section or by lesson as they are completed. This is a measure to reduce confusion for students, and I would appreciate your understanding.

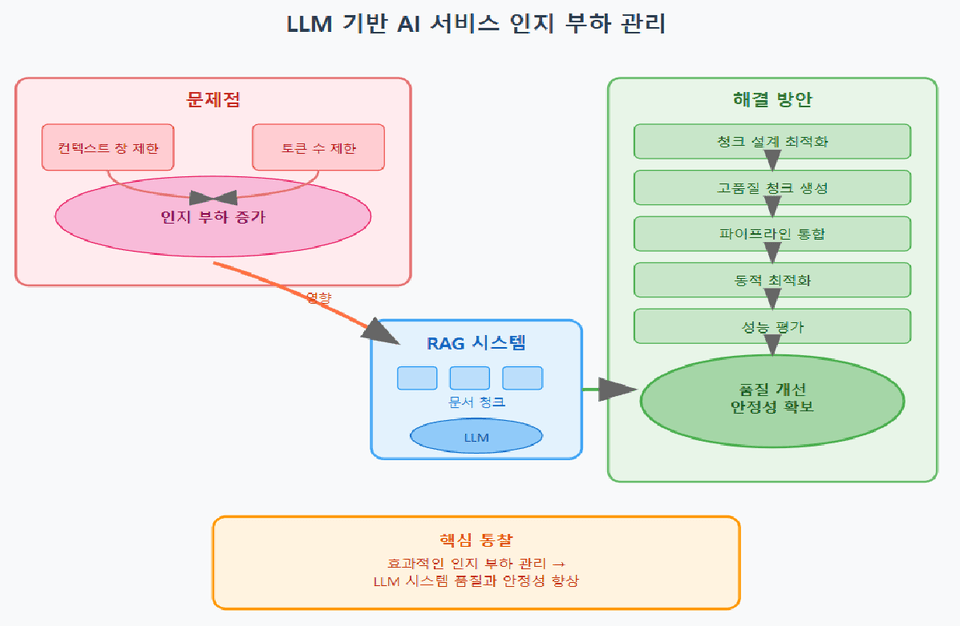

Large Language Model (LLM)-based artificial intelligence services have become mainstream, but they face limitations due to restricted context window sizes and token counts. Particularly in RAG (Retrieval-Augmented Generation) systems, failure to properly manage documents or chunks (fragments of documents) can create cognitive load on the LLM side, making it difficult to generate optimal responses.

Cognitive load is how difficult it is for a system (a human brain or an artificial intelligence) to take in information, given the amount and complexity of what it must process. When cognitive load rises in an LLM system, excess information accumulates and obscures the core message, performance degrades, and responses fall below the expected level. Effective cognitive load management is therefore a key factor in the quality and stability of LLM-based systems.

Building on the concepts of LLM context window limitations and cognitive load, this course presents a step-by-step methodology that can be applied directly in practice: chunk design, high-quality chunk generation, RAG pipeline integration, dynamic optimization, and performance evaluation. The aim is to substantially reduce the response quality degradation that existing RAG enhancement techniques have not been able to resolve.

LLM Context Window and Cognitive Load Management Strategies Based on Token Limits

Methods for Generating High-Quality Chunks and Utilizing Various Chunking Techniques

Techniques for Integrating Data Preprocessing, Retrieval, Prompt Design, and Post-Processing into a RAG System

Dynamic Optimization through Real-time Chunk Size Adjustment and Summary Parameter Control

Performance Evaluation Metrics Application and Results Report Writing Guidelines

Artificial Intelligence (AI), ChatGPT, LLM, RAG, AI Utilization (AX)

The first section clearly establishes the overall outline and objectives of this course, covering the fundamental concepts of LLM context windows and cognitive load management. In particular, you'll gain a detailed understanding of what cognitive load is, why it's important in LLM environments, and learn the basics of RAG. Based on theory, we'll touch on the core topics covered in the course, helping you establish a learning direction. Concepts are explained step by step so that even beginners can easily follow along, laying a solid foundation for naturally progressing to advanced topics later on.

This section provides an in-depth analysis of LLM context windows and tokenization mechanisms. It examines in detail what tokens are, how they are segmented, and how they affect model input, explaining with various examples how context window size limitations impact model performance. Additionally, you'll learn how to calculate costs based on tokens, developing practical insights that can be applied when designing real-world systems. Through this process, you'll gain a systematic understanding of tokens and context, enabling you to intuitively grasp the specific challenges of cognitive load management.
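As a rough illustration of the token-based cost reasoning described above, here is a minimal sketch. It is not taken from the course materials. The four-characters-per-token ratio is only a rule of thumb and stands in for a real tokenizer, and the function names and the prices passed in are hypothetical placeholders.

```python
def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Rough token estimate from character count.

    Assumption: about 4 characters per token. A real tokenizer will
    give different counts, especially for non-English text.
    """
    if not text:
        return 0
    return max(1, round(len(text) / chars_per_token))


def estimate_cost(prompt_tokens: int, completion_tokens: int,
                  price_in_per_1k: float, price_out_per_1k: float) -> float:
    """Cost of one request, given hypothetical prices per 1,000 tokens."""
    input_cost = (prompt_tokens / 1000) * price_in_per_1k
    output_cost = (completion_tokens / 1000) * price_out_per_1k
    return input_cost + output_cost


def fits_context(prompt_tokens: int, reserved_for_answer: int,
                 context_window: int) -> bool:
    """True if the prompt plus the space reserved for the answer fits."""
    return prompt_tokens + reserved_for_answer <= context_window
```

Reserving part of the window for the answer matters because retrieved chunks and the generated response share the same context limit.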

Effective chunk design is the key to RAG system quality. This section introduces various chunking strategies ranging from fixed-size chunks to paragraph-based, semantic unit clustering, and hierarchical structures, and deeply covers the advantages, disadvantages, and application cases of each method. Based on an understanding of how chunk size and structure affect cognitive load and context utilization, you can acquire practical know-how for designing optimal chunking strategies suited to different situations. Finally, through hands-on practice, you will gain experience applying various chunking methods, organically connecting theory and practice.
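Two of the strategies named above, fixed-size and paragraph-based chunking, can be sketched as follows. This is an illustrative sketch, not the course's implementation. It counts whitespace-separated words instead of model tokens, and the function names and parameters are assumptions.

```python
import re


def fixed_size_chunks(text: str, size: int, overlap: int = 0) -> list[str]:
    """Split text into chunks of `size` words, sharing `overlap` words."""
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("need size > 0 and 0 <= overlap < size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # the last window already reached the end of the text
    return chunks


def paragraph_chunks(text: str, max_words: int) -> list[str]:
    """Pack consecutive paragraphs into chunks of at most `max_words` words.

    A single paragraph longer than `max_words` is kept whole.
    """
    paragraphs = [p.strip() for p in re.split(r"\n\s*\n", text) if p.strip()]
    chunks, current, count = [], [], 0
    for paragraph in paragraphs:
        n = len(paragraph.split())
        if current and count + n > max_words:
            chunks.append("\n\n".join(current))
            current, count = [], 0
        current.append(paragraph)
        count += n
    if current:
        chunks.append("\n\n".join(current))
    return chunks
```

Fixed-size chunks are predictable in length but can cut a thought in half. Paragraph-based chunks respect the author's boundaries at the price of uneven sizes.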

This section covers more advanced techniques for creating chunks that are well-suited to reducing cognitive load and improving information quality. You'll learn various technologies such as smart summarization, merging original text with summaries, embedding-based clustering, meta-tagging, and reflecting query intent, and practice how to combine each technique to create more efficient chunks. Through this, you'll develop the capability to generate high-quality chunks that go beyond simple chunking methods by reflecting the meaning of information and even the questioner's intent. This is a core strategy for helping LLMs deliver optimal answers even when dealing with complex documents.

This section comprehensively covers RAG system design and integration. It systematically addresses the entire RAG pipeline process, from preprocessing, similarity search and filtering, chunk reconstruction and prompt design, answer generation and post-processing, to hallucination detection and re-injection strategies. Through hands-on practice, you'll learn techniques for each stage that minimize cognitive load while focusing on accurate answer generation. The section emphasizes practical skills and problem-solving methods that can be immediately applied in real-world environments.

This section covers methods for dynamically adjusting context load and chunk size according to the situation. It provides an in-depth introduction to automation and optimization strategies for intelligent system operation, ranging from question complexity assessment, dynamic chunk size adjustment algorithms, adaptive summarization parameter tuning, context accumulation management in multi-turn conversations, to system monitoring and feedback loop design. Through this, you will acquire real-time management capabilities that can maximize LLM performance while responding to changing requirements and complexity levels.
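To make the idea of adjusting chunk size to question complexity concrete, here is a small sketch. The scoring heuristic, the word list, and the policy of giving complex questions more but smaller chunks are all assumptions made for illustration. The course may use a different algorithm.

```python
# Hypothetical cue words that suggest a multi-part or analytical question.
CUE_WORDS = {"and", "or", "versus", "vs", "compare", "why", "how"}


def question_complexity(question: str) -> float:
    """Heuristic complexity score in [0, 1] (an assumption, not a standard)."""
    words = question.split()
    length_score = min(len(words) / 30, 1.0)
    hits = sum(1 for w in words if w.lower().strip("?,.") in CUE_WORDS)
    cue_score = min(hits / 3, 1.0)
    return 0.5 * length_score + 0.5 * cue_score


def plan_retrieval(question: str, context_budget_tokens: int,
                   min_chunk: int = 128, max_chunk: int = 512) -> tuple[int, int]:
    """Return (chunk_tokens, top_k) that fit within the context budget.

    Assumed policy: simple questions get a few large chunks, complex
    questions get more, smaller chunks from a wider range of sources.
    """
    if context_budget_tokens <= 0:
        raise ValueError("context_budget_tokens must be positive")
    score = question_complexity(question)
    chunk_tokens = round(max_chunk - score * (max_chunk - min_chunk))
    chunk_tokens = min(chunk_tokens, context_budget_tokens)
    top_k = max(1, context_budget_tokens // chunk_tokens)
    return chunk_tokens, top_k
```

In a monitored system, the weights and thresholds here would be tuned from feedback instead of being fixed by hand.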

This section covers various metrics and evaluation methodologies for objectively assessing the effectiveness of RAG systems and chunking strategies. You'll learn how to identify areas for improvement by measuring system performance from several angles: recall, accuracy, response latency, cost analysis, token usage efficiency, user satisfaction, and strategy validation through A/B testing. It provides insights for continuous performance tuning and advancement based on evaluation results, strengthening data-driven decision-making capabilities.
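Of the metrics listed above, retrieval recall is the simplest to compute by hand. The sketch below shows recall@k alongside precision@k. The chunk IDs are made-up examples, and the function names are illustrative, not from the course.

```python
def recall_at_k(retrieved: list[str], relevant: set[str], k: int) -> float:
    """Share of the relevant chunks that appear in the top-k results."""
    if not relevant:
        return 0.0
    top = set(retrieved[:k])
    return len(top & relevant) / len(relevant)


def precision_at_k(retrieved: list[str], relevant: set[str], k: int) -> float:
    """Share of the top-k results that are relevant."""
    top = retrieved[:k]
    if not top:
        return 0.0
    return len(set(top) & relevant) / len(top)


# Hypothetical example: the retriever returned four chunk IDs, and a
# human labeled three chunks as relevant to the question.
retrieved_ids = ["c1", "c7", "c3", "c9"]
relevant_ids = {"c1", "c3", "c5"}
recall = recall_at_k(retrieved_ids, relevant_ids, k=3)        # 2 of 3 relevant found
precision = precision_at_k(retrieved_ids, relevant_ids, k=3)  # 2 of 3 results relevant
```

Comparing these numbers across two chunking strategies on the same labeled questions is one simple form of the A/B validation mentioned above.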

This section discusses research challenges and future expansion possibilities in the fields of RAG and LLM cognitive load management. It covers fully automated chunk optimization, long-term memory integration issues, scenarios for building large-scale multimedia document-based RAG systems, and methods for extending RAG systems to process multimodal information. Through the latest research trends and practical application cases, it provides a clear understanding of future development directions and the challenges ahead.

This section explains how to conduct a comprehensive project that integrates the theories and techniques learned so far to design, implement, tune, and evaluate an actual RAG system. You will proceed step by step through every stage: selecting a project topic, collecting and preprocessing data, designing chunk strategies, integrating the RAG system, evaluating performance and writing result reports, and conducting final presentations and code reviews. In doing so, you can validate your practical capabilities. Based on what you learn here, developers can form teams or work individually to carry out practical projects, and through this process fully internalize the content of this course.

You will understand the concept of cognitive load, clearly grasp LLM context windows and token limits, and acquire strategies to manage them.

You will learn various chunking techniques and chunk optimization methods for dividing and summarizing information efficiently to maximize LLM performance.

You will practice the entire RAG pipeline process and develop the ability to build and tune actual systems.

Through dynamic optimization and performance evaluation, you will learn how to provide stable and high-performance AI services in real-time operational environments.

You will understand the development trends of AI systems and strengthen your future readiness through the latest research challenges and expansion directions.

Who is this course right for?

Developers who directly design or operate LLM and RAG systems

AI engineers who optimize large-volume document processing and multi-turn dialogue processing

Need to know before starting?

Understanding Basic Concepts of Natural Language Processing (NLP)

Understanding the Basic Working Principles of Large Language Models (LLMs)

Concepts of Tokenization and Context Window

Basic programming skills (Python language recommended)

(Optional) Experience utilizing AI and machine learning models or conducting related projects

612 learners ∙ 31 reviews ∙ 2 answers ∙ 4.5 rating ∙ 18 courses

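I am someone for whom IT is both a hobby and a profession.

I have a range of experience in writing, translation, consulting, development, and lecturing.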

326 lectures ∙ (50hr 15min)

$254.10
