(For Product Managers) Understanding the Basics of LLM and Planning LLM-based Services

arigaram

$77.00

Beginner / NLP, gpt, AI, ChatGPT, LLM

3.9

(7)

Explains why LLMs are needed, their technical background, and basic concepts.

Beginner

NLP, gpt, AI

Starting from the origins of natural language processing technology, this course explains in detail the various language models developed on the way to the latest LLMs.

18 learners are taking this course

Level Beginner

Course period Unlimited


The Development Process of Language Models and the Principles of Each Language Model

The Origins of NLP

The Structure and Principles of Transformers

The Structure and Principles of RNN and LSTM

The Principles of Attention Mechanism

This course is still being completed. You may have to wait a while before it is fully finished (though I will add content regularly), so please take this into account when deciding whether to purchase it.

December 10, 2025

I've added a large number of new lessons and have released the table of contents for now. They are marked as [2nd Edition].

I have marked the existing lessons as [Edition 1] and plan to revise them. Once a lesson is updated with revised content, its title will be marked [Edition 2].

This course provides a comprehensive learning journey through the evolution of language models, from early natural language processing research to modern large language models (LLMs). You will systematically understand the technological transformations that span from the rule-based era through statistical language models, neural network-based models, and the transformer revolution, leading to today's multimodal, efficiency-focused, and application-oriented LLMs.

Understand the overall evolution of language models.

Understand the characteristics of key models from each era (RNN, LSTM, Transformer, BERT, GPT, etc.).

Organize the latest LLM technologies and research trends in a structured way.

Understand LLM efficiency techniques and their practical applications.

Critically examine the direction and limitations of future LLM research.

The course consists of a total of 6 sections, with each section organized around chronological developments and research axes.

Section 1: The Origins and Early Development of NLP

Section 2: Language Model Research Before Transformers

Section 3: The Transformer Revolution and Large Language Models

Section 4: Latest LLM Technologies and Research Trends

Section 5: LLM Efficiency Techniques and Model Optimization

Section 6: LLM Applications, System Integration, and Future Outlook

In this section, you will learn about NLP from its starting point through the foundations of early language models.

What problems NLP addresses and how it began

How rule-based systems were structured and why they hit their limits

How statistical language models (n-gram LM) emerged

The emergence and significance of early large-scale corpora such as the Brown Corpus and Penn Treebank

The Concept of the Distributional Hypothesis and Its Application in NLP

The distributional hypothesis is a fundamental concept in natural language processing (NLP): words that appear in similar contexts tend to have similar meanings. It forms the theoretical foundation of modern word embedding techniques and plays a crucial role in enabling computers to model the meaning of language. The idea is summed up in the phrase "You shall know a word by the company it keeps": the meaning of a word can be inferred from the words that frequently appear around it. For example, words like "dog" and "cat" often appear in similar contexts.

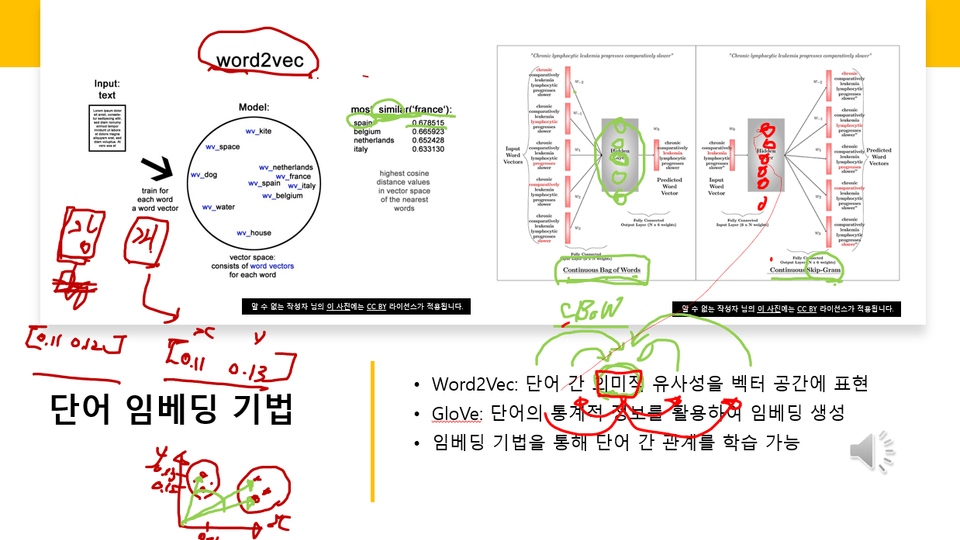

Word2Vec, GloVe, and Other Early Word Embedding Technologies: Their Birth and Contributions

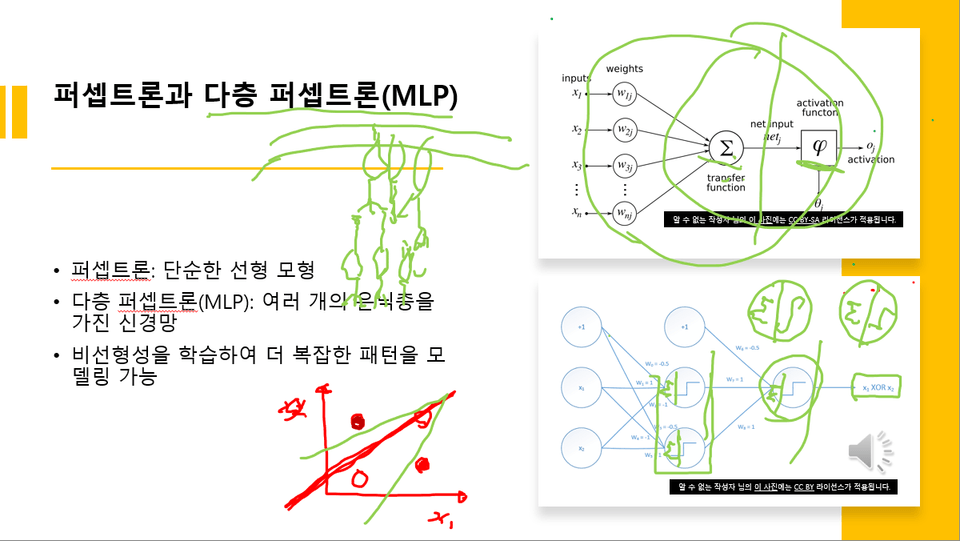

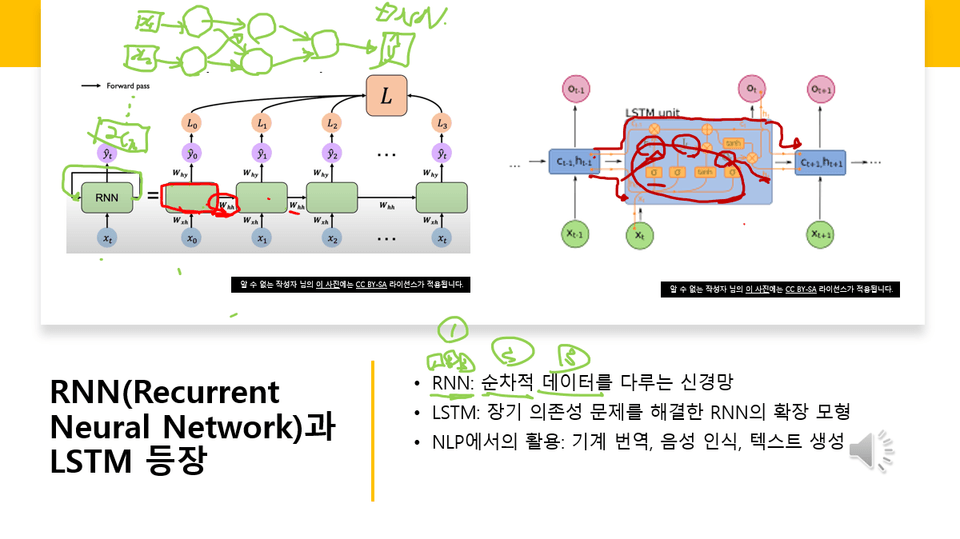
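To make the distributional hypothesis concrete, here is a minimal Python sketch (not taken from the course materials) that represents each word by counts of the words appearing within two positions of it, then compares words with cosine similarity. The toy corpus, the window size of 2, and the function names `cooccurrence_vectors` and `cosine` are illustrative assumptions; real systems use far larger corpora and usually reweight or compress these raw counts (for example with PPMI, or with learned embeddings such as Word2Vec).

```python
import math
from collections import Counter, defaultdict


def cooccurrence_vectors(sentences, window=2):
    """For each word, count how often every other word appears within
    `window` positions of it (counting only within the same sentence)."""
    vectors = defaultdict(Counter)
    for sentence in sentences:
        tokens = sentence.lower().split()
        for i, word in enumerate(tokens):
            lo = max(0, i - window)
            hi = min(len(tokens), i + window + 1)
            for j in range(lo, hi):
                if j != i:  # a word is not its own context
                    vectors[word][tokens[j]] += 1
    return vectors


def cosine(u, v):
    """Cosine similarity between two sparse count vectors (Counters)."""
    dot = sum(count * v[w] for w, count in u.items())  # missing keys count as 0
    norm_u = math.sqrt(sum(c * c for c in u.values()))
    norm_v = math.sqrt(sum(c * c for c in v.values()))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


# Hypothetical toy corpus chosen only for illustration.
corpus = [
    "the dog chased the ball",
    "the cat chased the mouse",
    "the dog ate its food",
    "the cat ate its food",
    "she drove the car to work",
    "he parked the car at work",
]

vecs = cooccurrence_vectors(corpus)
print(round(cosine(vecs["dog"], vecs["cat"]), 2))  # prints 1.0
print(round(cosine(vecs["dog"], vecs["car"]), 2))  # prints 0.5
```

Because "dog" and "cat" share exactly the same contexts in this toy corpus, their count vectors point in the same direction, while "car" shares only "the" with them, so its similarity to "dog" is lower. Embedding methods such as Word2Vec and GloVe build on the same intuition but learn dense, low-dimensional vectors instead of keeping raw counts.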

In this section, we cover how RNN-based models transformed NLP and the technical limitations before transformers.

The Background and Structural Principles of RNN, LSTM, and GRU

The Essence of the Long-term Dependency Problem

How the Seq2Seq Architecture Led to Innovation in Machine Translation

The Reason for the Emergence of the Attention Mechanism and Its Effects

The main ideas of CNN-based language models (a separate line of research, so this part is covered with some uncertainty)

Understanding the research landscape before Transformers and the need for next-generation models

In this section, you'll learn how the modern LLM era centered on transformers began.

The structure and characteristics of the Transformer, introduced in "Attention Is All You Need"

The Background of Pretraining and Language Understanding Models

The Concept of Bidirectionality in BERT and the MLM (Masked Language Model) Technique

The main development flow of the GPT series (from GPT to GPT-4)

The standardization of the "pre-training → fine-tuning" learning paradigm

The Meaning of Scaling Laws and Changes in LLM Training Strategies

This section covers not only the structure, characteristics, and training methods of the latest LLMs, but also models trained with human feedback.

Common Characteristics of Modern LLMs like GPT-4, Llama, and Claude

The Background of Open-Source LLMs (e.g., Llama, Mistral)

RLHF, DPO, instruction tuning, and other techniques for tailoring models to user intent

Structure and Use Cases of Multimodal Models

Research on Bias, Hallucination, Safety, and Ethical Considerations

This section focuses on techniques for making large-scale models lighter and faster.

Quantization, Pruning, Knowledge Distillation

LoRA, prefix tuning, and other PEFT (Parameter-Efficient Fine-Tuning) methods

FlashAttention and other high-speed Attention algorithms

Inference Cost Reduction Techniques

The Concept and Technical Challenges of On-Device LLMs

Efficiency Case Studies in Real Service Implementation

This section teaches how LLMs are actually used in real systems and services, and summarizes future directions while acknowledging some uncertainties.

Structure and Advantages of Retrieval-Augmented Generation (RAG)

The principles of tool-based LLMs such as Toolformer and ReAct

Medical, legal, and coding domain-specific LLMs

Expansion of Multimodal Models such as GPT-4V

LLM-Based Autonomous Systems Research (partially "uncertain")

The future outlook and points of debate regarding LLMs (e.g., the possibility of AGI, marked "uncertain")

As shown in the example screens below, various diagrams are used throughout the lectures to explain LLM-related concepts in detail. In particular, diagrams related to NLP, RNNs, self-attention, the Transformer, and LLMs are used for intensive explanations.

Lesson 3 Screen Example 1

Lesson 3 Screen Example 2

Lesson 3 Screen Example 3

Learners interested in artificial intelligence and data science

Developers and researchers who want to systematically understand NLP or LLM technology

Anyone who wants to understand the latest trends in artificial intelligence technology

Basic Machine Learning Concepts

Experience with using simple Python-based models (recommended)

You can gain a deep understanding of the overall history of language model development.

You can build foundational knowledge to analyze and utilize the latest LLM technologies and trends.

You can design problem-solving approaches, service designs, and research directions utilizing LLMs.

Since this is a theory-focused lecture, no separate practice environment is required.

Lecture materials are attached in PDF format.

The History and Development of LLMs: From the Origins of Language Models to the Latest Technologies

Who is this course right for?

Those who want to learn about the origins, development, and technology trends of LLMs

Those who want to understand the artificial neural network architecture that forms the foundation of LLMs

Those who want to build theoretical knowledge for developing LLMs directly

Learners: 626

Reviews: 31

Answers: 2

Rating: 4.5

Courses: 18

I am someone for whom IT is both a hobby and a profession.

I have a diverse background in writing, translation, consulting, development, and lecturing.


72 lectures (10 hr 39 min)

Course Materials:
