I majored in mechanical engineering, but since graduation, I have been reading and writing code. I am a Google AI/Cloud GDE and a Microsoft AI MVP. I run the TensorFlow blog (tensorflow.blog), and I enjoy exploring the boundary between software and science while writing and translating books on machine learning and deep learning.

I have authored "Deep Learning by Building Alone" (Hanbit Media, 2025), "Machine Learning + Deep Learning Alone (Revised Edition)" (Hanbit Media, 2025), "Data Analysis with Python Alone" (Hanbit Media, 2023), "The Art of Conversing with ChatGPT" (Hanbit Media, 2023), and "Do it! Introduction to Deep Learning" (EasysPublishing, 2019).

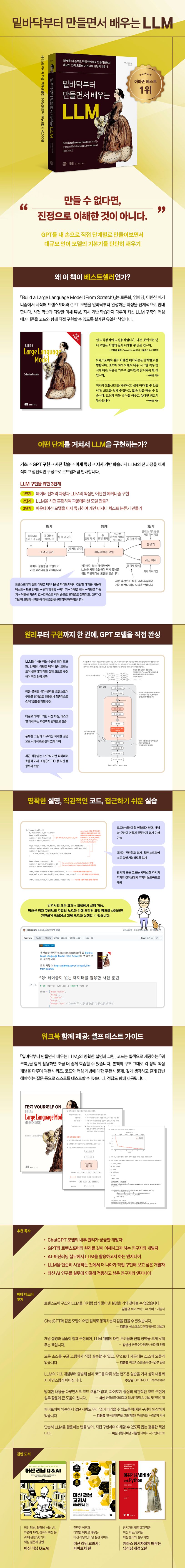

I have translated dozens of books into Korean, including "Large Language Models, Just the Essentials!" (Insight, 2025), "Machine Learning, Just the Essentials!" (Insight, 2025), "Build a Large Language Model (From Scratch)" (Gilbut, 2025), "Hands-On Large Language Models" (Hanbit Media, 2025), "Machine Learning Q & AI" (Gilbut, 2025), "Math for Developers" (Hanbit Media, 2024), "Machine Learning Solutions with Python for Real-World Applications" (Hanbit Media, 2024), "Machine Learning with PyTorch and Scikit-Learn" (Gilbut, 2023), "What Is ChatGPT Doing... and Why Does It Work?" by Stephen Wolfram (Hanbit Media, 2023), "Hands-On Machine Learning with Scikit-Learn, Keras, and TensorFlow, 3rd Edition" (Hanbit Media, 2023), "Generative Deep Learning, 2nd Edition" (Hanbit Media, 2023), "Python for Awakening Your Coding Brain" (Hanbit Media, 2023), "Natural Language Processing with Transformers" (Hanbit Media, 2022), "Deep Learning with Python, 2nd Edition" (Gilbut, 2022), "Machine Learning & Deep Learning for Developers" (Hanbit Media, 2022), "Gradient Boosting with XGBoost and Scikit-Learn" (Hanbit Media, 2022), "Deep Learning with TensorFlow.js from Google Brain Team" (Gilbut, 2022), and "Introduction to Machine Learning with Python, 2nd Edition" (Hanbit Media, 2022).

![[Complete NLP Mastery I] The Birth of Attention: Understanding NLP from RNN·Seq2Seq Limitations to Implementing Attention (Course Thumbnail)](https://cdn.inflearn.com/public/files/courses/339625/cover/01ka39v05h3st4hcttnfhfwvhd?w=420)