After graduating with my PhD, I had the opportunity to study and teach computer vision for about five years, which led me to

Since then, my studies have focused on bridging the gap between mathematics, my major, and engineering theory.

Areas of Expertise (Fields of Study)

Major: Mathematics (Topological Geometry), Minor: Computer Science

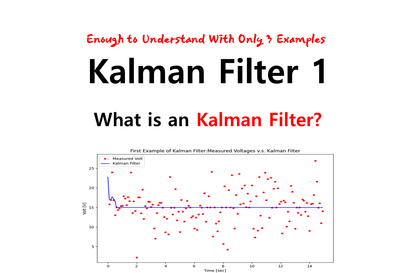

Current) Researcher in 3D Computer Vision (3D Reconstruction), Kalman Filter, Lie Groups (SO(3)), and Stochastic Differential Equations

Current) YouTube Channel Host: Jang-hwan Lim: 3D Computer Vision

Current) Facebook Spatial AI KR Group (Mathematics Advisory Committee Member)

Education

PhD in Natural Sciences, University of Kiel, Germany (Major in Topological Geometry & Lie Groups, Minor in Computer Science)

Bachelor's and Master's (Topology major) in Mathematics, Chung-Ang University

Experience

Former) CTO of Doobivision, a subsidiary of Daesung Group

Former) Research Professor at Chung-Ang University Graduate School of Advanced Imaging (3D Computer Vision Research)

Books:

Optimization Theory: https://product.kyobobook.co.kr/detail/S000200518524

Links

YouTube: https://www.youtube.com/@3dcomputervision

Blog: https://blog.naver.com/jang_hwan_im
