High-Quality AI Agent Context Engineering

AISchool

$59.40

Intermediate / AI Agent, LangGraph, AI, Generative AI, OpenAI API

Rating: 3.5 (2 reviews)

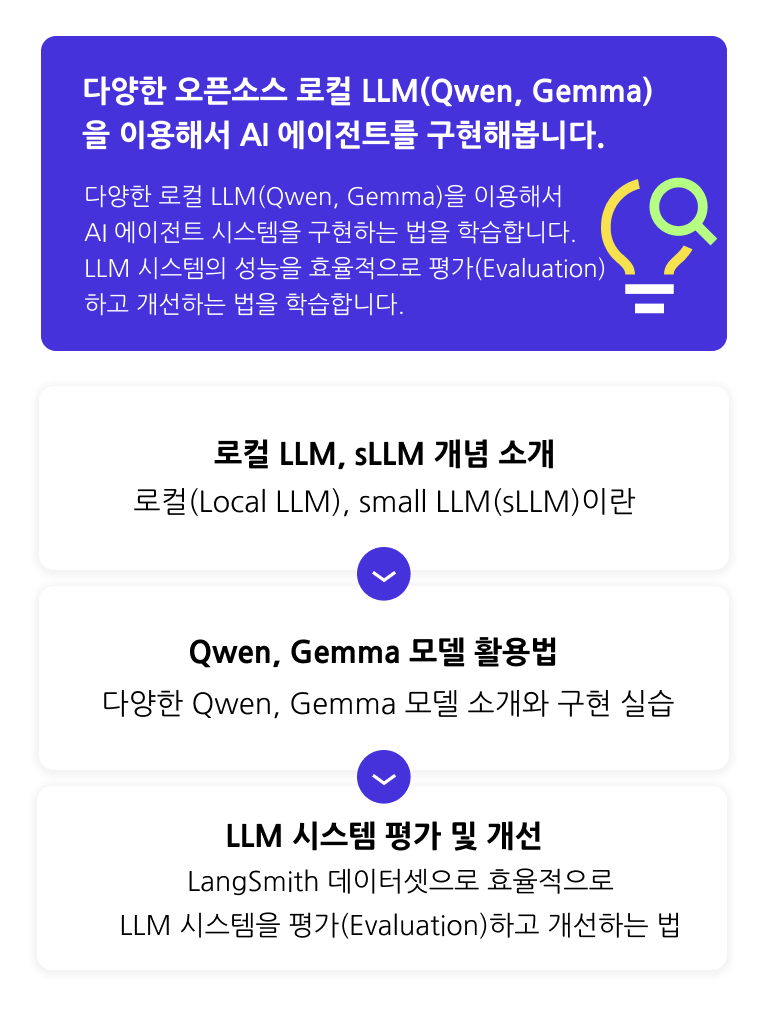

Learn context engineering techniques for creating high-quality AI agents through hands-on practice.