![[VLM101] Building a Multimodal Chatbot with Fine-tuning (feat.MCP / RunPod) course thumbnail](https://cdn.inflearn.com/public/files/courses/337551/cover/01jzjdkw9evbt245h3w2mdfs2r?w=420)

[VLM101] Building a Multimodal Chatbot with Fine-tuning (feat.MCP / RunPod)

dreamingbumblebee

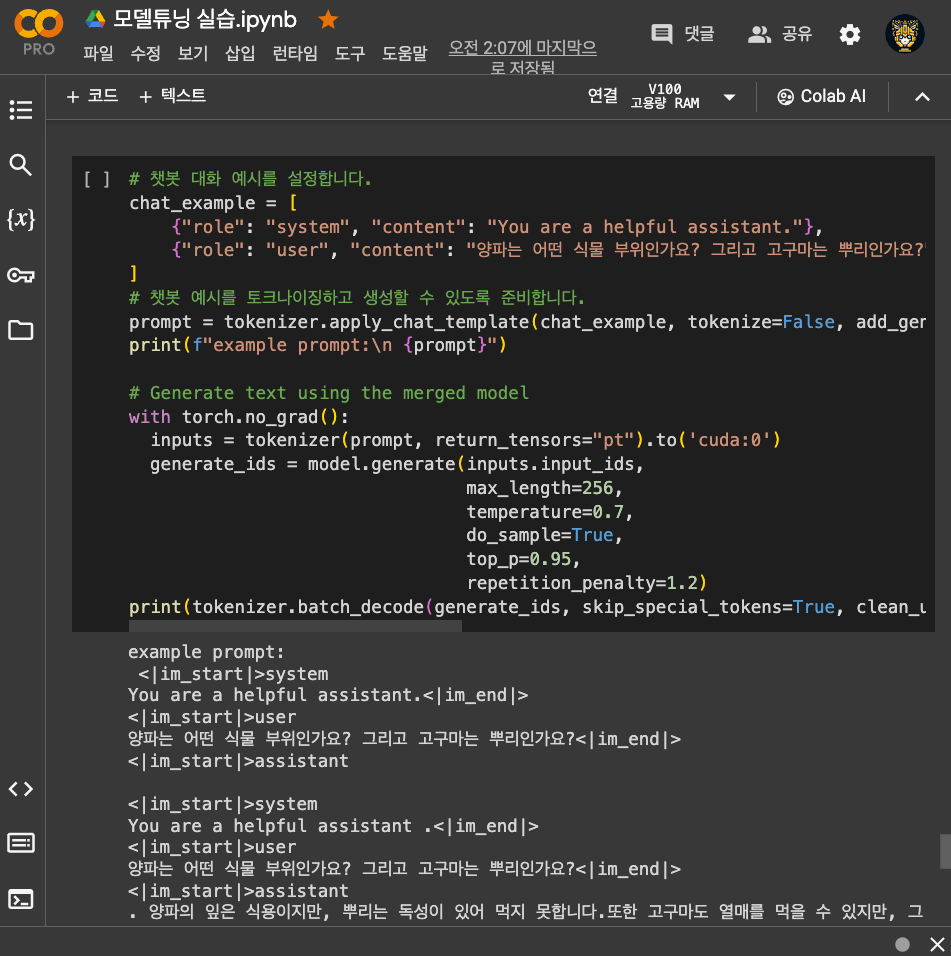

This introductory course explains the concepts and applications of Vision-Language Models (VLMs), with hands-on practice running the LLaVA model in an Ollama-based environment and integrating it with MCP (Model Context Protocol). Topics include the principles of multimodal models, quantization, service development, and integrated demo development, balancing theory with practical exercises.

Beginner

Vision Transformer, transformer, Llama

![[Practical AIoT] Perfect Preparation for Smart Mirror Makerthon: LLM, CV, and Hardware Design course thumbnail](https://cdn.inflearn.com/public/files/courses/340196/cover/01kexgfr26whtfsmsqd2dj1x7x?w=420)