![[VLM101] Building a Multimodal Chatbot with Fine-Tuning (feat. MCP / RunPod) course thumbnail](https://cdn.inflearn.com/public/files/courses/337551/cover/01jzjdkw9evbt245h3w2mdfs2r?w=420)

[VLM101] Building a Multimodal Chatbot with Fine-Tuning (feat. MCP / RunPod)

꿈꾸는범블비

₩77,000

Beginner / Vision Transformer, transformer, Llama, MCP

4.6 (18 reviews)

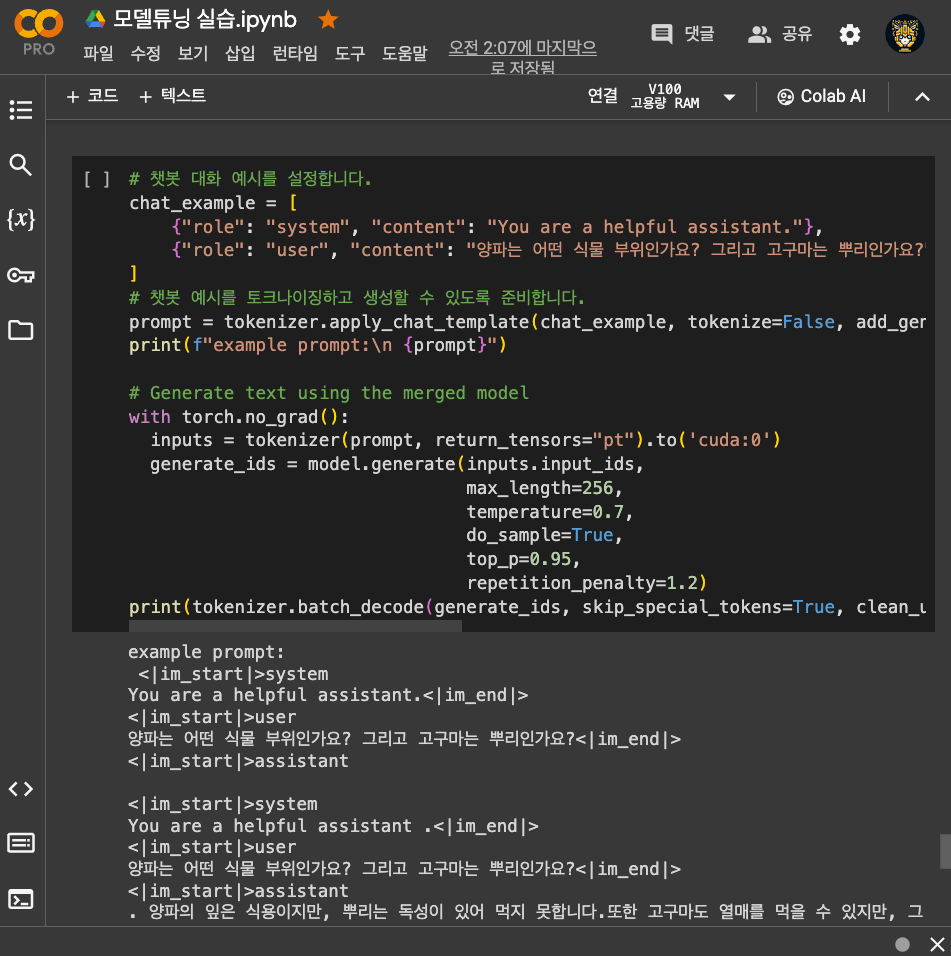

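This beginner course introduces the concepts and applications of Vision-Language Models (VLMs). You run the LLaVA model in an Ollama-based environment and practice integrating it with MCP (Model Context Protocol). The course covers how multimodal models work, model compression through quantization, and building a service and an integrated demo, with a balance of theory and hands-on practice.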


![[Python Beginner] Building a ChatGPT Voice Translation App with Flutter course thumbnail](https://cdn.inflearn.com/public/courses/333668/cover/94589a80-ab05-4bd1-ad98-1e42b75a47fc/333668.png?w=420)

![[Practical AIoT] Complete Prep for the Smart Mirror Makerthon: From LLMs and CV to Hardware Design course thumbnail](https://cdn.inflearn.com/public/files/courses/340196/cover/01kexgfr26whtfsmsqd2dj1x7x?w=420)

![Just 1 Hour! Build Your Own 'AI Mentor' on Your Computer (Antigravity Vibe Coding) [Source Code Included] course thumbnail](https://cdn.inflearn.com/public/files/courses/340332/cover/ai/3/e87ee52b-1099-42db-a384-64ab8c725470.png?w=420)

![[Sionic MCP Series 1] Let's Code with IntelliJ Using the Model Context Protocol! course thumbnail](https://cdn.inflearn.com/public/files/courses/336732/cover/01jqn0fg6k2x8bn0qfarc42pjw?w=420)

![[AI Cheat Key] The Secret to Finishing Work in a Flash: Agentic AI course thumbnail](https://cdn.inflearn.com/public/files/courses/340717/cover/ai/1/3b5cb844-25b5-4576-8224-d293d0989376.png?w=420)