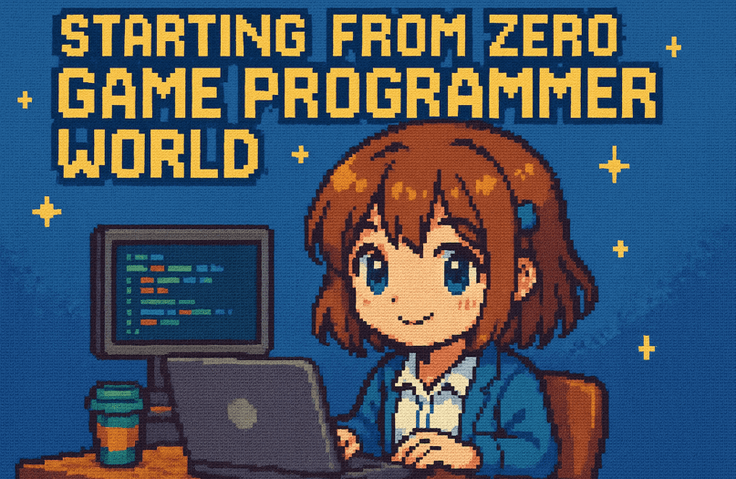

Instructor Introduction

Experience

I am currently working as a game programmer at a major game company, Company N.

I was selected as one of the Top 100 finalists in the 2025 KAKAO AI_TOP_100.

Interests

I am highly interested in systems, game development, programming, DevOps, drawing, subculture, and AI.

A Few Words

I believe that subculture and AI can change the world.

I believe that the seeds of true growth are found in mistakes and failures.

Repositories & Social Platforms

GitHub: https://github.com/kojeomstudio

Docker Hub: https://hub.docker.com/repos
