Mission #4. PV/PVC, Deployment, etc. Hands-on Assignment

8 months ago

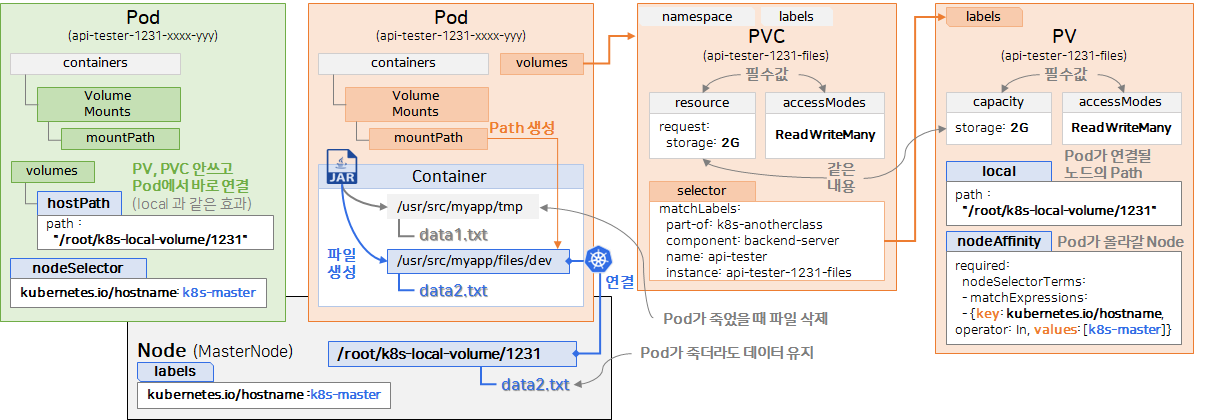

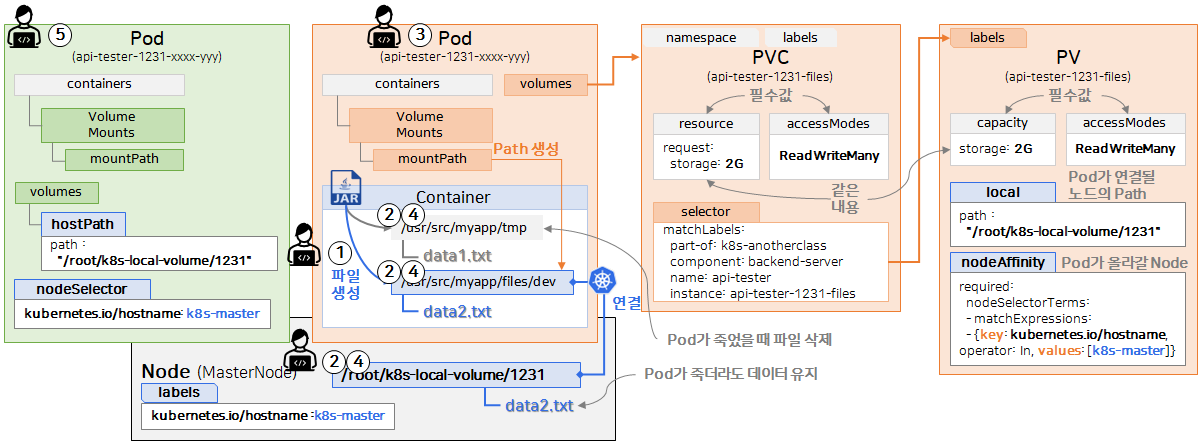

- Step 1 (API) - Create files

http://192.168.56.30:31231/create-file-pod

http://192.168.56.30:31231/create-file-pv

- Step 2 - Check the container's temporary folder

[root@k8s-master ~]# kubectl exec -n anotherclass-123 -it api-tester-1231-755676484f-26gmv -- ls /usr/src/myapp/tmp

qlifdymaky.txt

- Step 2 - Check the container's persistent storage folder

[root@k8s-master ~]# kubectl exec -n anotherclass-123 -it api-tester-1231-755676484f-26gmv -- ls /usr/src/myapp/files/dev

ckxjytizlh.txt

- Step 2 - Check the same folders in the second Pod (api-tester-1231-755676484f-p9qqt)

[root@k8s-master ~]# kubectl exec -n anotherclass-123 -it api-tester-1231-755676484f-p9qqt -- ls /usr/src/myapp/files/dev

ckxjytizlh.txt

[root@k8s-master ~]# kubectl exec -n anotherclass-123 -it api-tester-1231-755676484f-p9qqt -- ls /usr/src/myapp/tmp

ls: cannot access '/usr/src/myapp/tmp': No such file or directory

command terminated with exit code 2

- Step 2 - Check the folder on the master node

[root@k8s-master ~]# ls /root/k8s-local-volume/1231

ckxjytizlh.txt

- Step 3 - Delete the Pod

[root@k8s-master ~]# kubectl delete -n anotherclass-123 pod api-tester-1231-755676484f-26gmv

pod "api-tester-1231-755676484f-26gmv" deleted

- Step 4 (API) - List files

[root@k8s-master ~]# curl http://192.168.56.30:31231/list-file-pod

[root@k8s-master ~]# curl http://192.168.56.30:31231/list-file-pv

ckxjytizlh.txt
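Steps 2 to 4 can also be run as one loop. Below is a minimal sketch. It assumes the Pods can be found by the `api-tester-1231` name prefix, and the helper name `compare_pod_dirs` is made up for this note. It lists both folders in every Pod, so the container-local `tmp` folder and the volume-backed `files/dev` folder can be compared side by side.

```shell
# Sketch (hypothetical helper): list the container-local tmp folder and the
# volume-backed files folder in every api-tester-1231 Pod.
# Assumption: Pods are matched by name prefix; namespace and paths are from this note.
NS="anotherclass-123"

compare_pod_dirs() {
  local pod
  # "kubectl get pods -o name" prints entries such as "pod/api-tester-1231-..."
  for pod in $(kubectl get pods -n "$NS" -o name | grep 'api-tester-1231'); do
    echo "== ${pod#pod/}"
    echo "-- /usr/src/myapp/tmp (container filesystem)"
    kubectl exec -n "$NS" "$pod" -- ls /usr/src/myapp/tmp 2>&1
    echo "-- /usr/src/myapp/files/dev (volume)"
    kubectl exec -n "$NS" "$pod" -- ls /usr/src/myapp/files/dev 2>&1
  done
}
```

Run `compare_pod_dirs` after step 1. The PV file should appear under every Pod, while the tmp file only shows up in the Pod that handled the request.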

Verify hostPath behavior - modify the Deployment as below, then repeat steps [1-4]

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      namespace: anotherclass-123
      name: api-tester-1231
    spec:
      template:
        spec:
          nodeSelector:
            kubernetes.io/hostname: k8s-master
          containers:
            - name: api-tester-1231
              volumeMounts:
                - name: files
                  mountPath: /usr/src/myapp/files/dev
                - name: secret-datasource
                  mountPath: /usr/src/myapp/datasource
          volumes:
            - name: files
              persistentVolumeClaim:              # remove
                claimName: api-tester-1231-files  # remove
              # add the hostPath below
              hostPath:
                path: /root/k8s-local-volume/1231
            - name: secret-datasource
              secret:
                secretName: api-tester-1231-postgresql
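A hostPath volume is a directory on one node's disk, which is why the manifest above pins the Pods to `k8s-master` with `nodeSelector`. Below is a quick sketch using a hypothetical helper, `check_pod_nodes`, which assumes Pod names start with `api-tester-1231`. It prints the node each Pod landed on and returns a non-zero status if any Pod is elsewhere.

```shell
# Sketch (hypothetical helper): show which node each api-tester-1231 Pod runs on.
# With hostPath + nodeSelector, every Pod is expected on the same node.
NS="anotherclass-123"

check_pod_nodes() {
  local expected="${1:-k8s-master}" report
  report="$(kubectl get pods -n "$NS" \
      -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.spec.nodeName}{"\n"}{end}' |
    awk -v want="$expected" '$1 ~ /^api-tester-1231/ {
      node = ($2 == "" ? "<unscheduled>" : $2)
      if (node == want) print "OK    " $1 " -> " node
      else              print "WRONG " $1 " -> " node
    }')"
  printf '%s\n' "$report"
  # non-zero exit status if any matching Pod is not on the expected node
  case "$report" in *WRONG*) return 1 ;; esac
  return 0
}
```

For example, `check_pod_nodes k8s-master` should print only `OK` lines after the Deployment above is applied.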

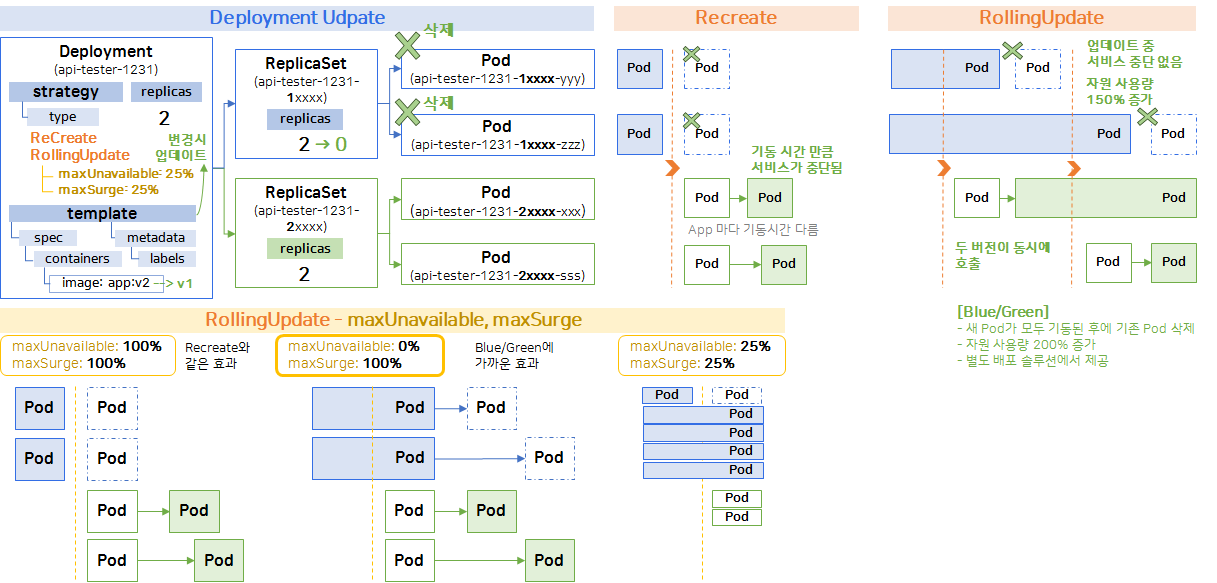

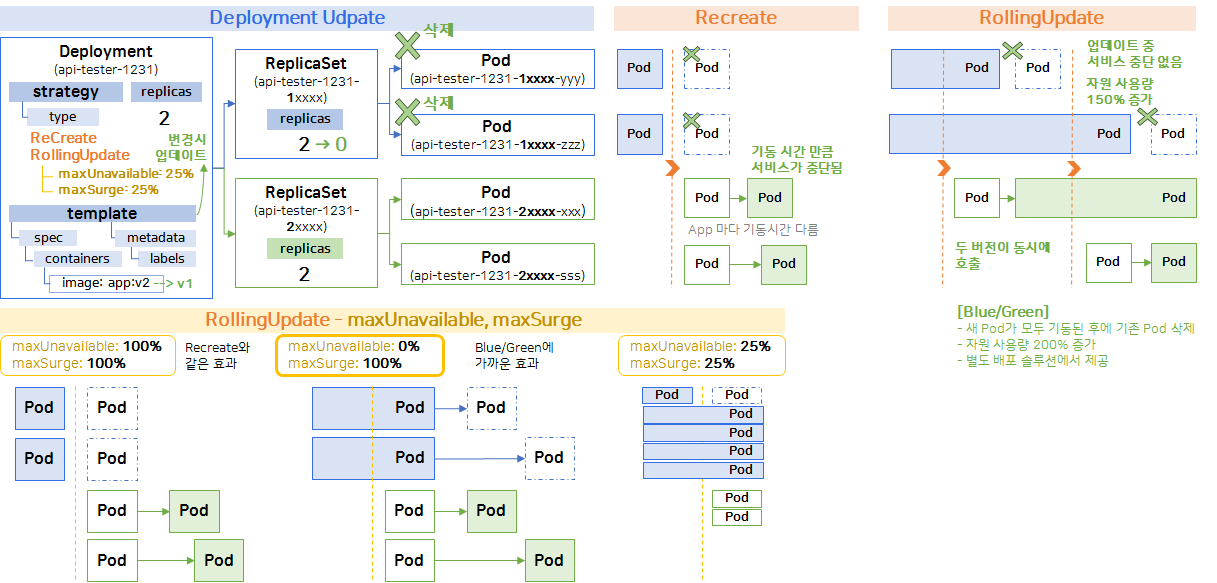

2. Deployment - search

2-1. update

2-2. Verify behavior

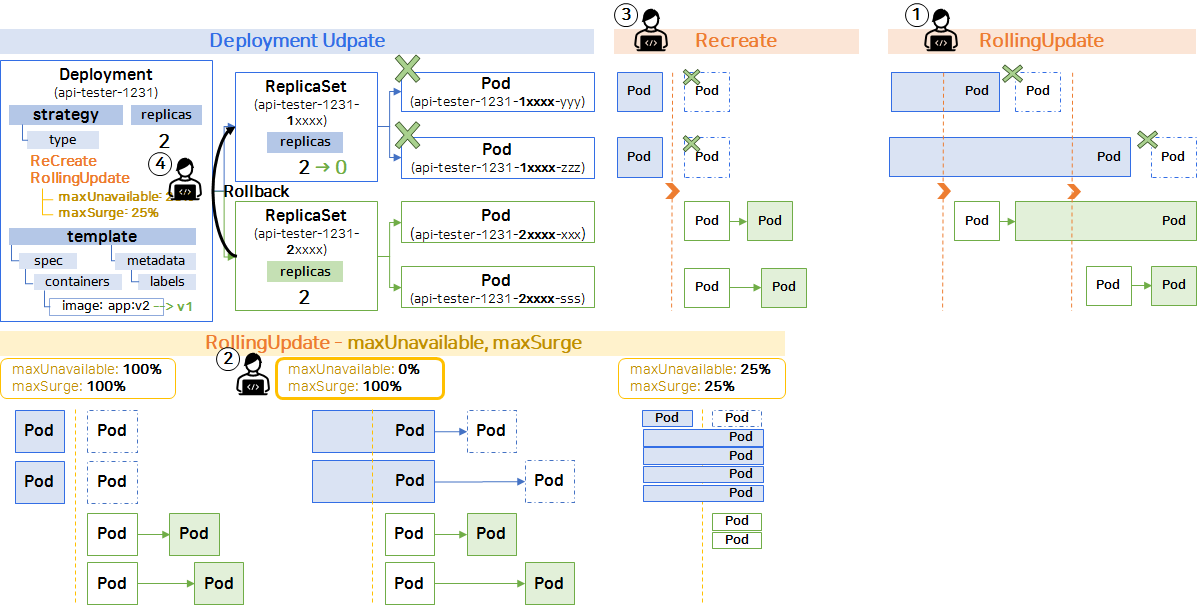

1. Perform a RollingUpdate

// 1) Change the HPA minReplicas to 2 (it had been set to 1 in a previous lecture)

kubectl patch -n anotherclass-123 hpa api-tester-1231-default -p '{"spec":{"minReplicas":2}}'

-> horizontalpodautoscaler.autoscaling/api-tester-1231-default patched

// 1) Alternatively, use the Deployment scale command

kubectl scale -n anotherclass-123 deployment api-tester-1231 --replicas=2

-> deployment.apps/api-tester-1231 scaled

// 1) Or edit the Deployment directly in edit mode

kubectl edit -n anotherclass-123 deployment api-tester-1231

// 2) Call /version continuously (watch the returned value during the update)

while true; do curl http://192.168.56.30:31231/version; sleep 2; echo ''; done;

-> [App Version] : Api Tester v1.0.0

-> [App Version] : Api Tester v1.0.0

-> [App Version] : Api Tester v1.0.0

// 3) Open a separate remote console window and run the update

kubectl set image -n anotherclass-123 deployment/api-tester-1231 api-tester-1231=1pro/api-tester:v2.0.0

-> deployment.apps/api-tester-1231 image updated
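The watch loop above only prints each response. The variation below is a hypothetical helper, `tally_versions`, that uses the same URL and 2-second interval. The number of calls is an assumed parameter. It counts how often each distinct response appears. A RollingUpdate should then show a mix of v1.0.0 and v2.0.0. A Recreate would likely show failed or empty calls while no Pod is running.

```shell
# Sketch (hypothetical helper): poll /version N times and count each distinct answer.
URL="http://192.168.56.30:31231/version"

tally_versions() {
  local count="${1:-30}" interval="${2:-2}" i=0 resp
  while [ "$i" -lt "$count" ]; do
    # -s hides the progress meter; --max-time stops one hung call from stalling the loop
    resp="$(curl -s --max-time 2 "$URL")" || resp="<request failed>"
    [ -n "$resp" ] || resp="<empty response>"
    printf '%s\n' "$resp"
    sleep "$interval"
    i=$((i + 1))
  done | sort | uniq -c    # one line per distinct response, prefixed by its count
}
```

Start `tally_versions 60 2` in one console, run `kubectl set image` in another, and read the counts when the loop ends.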


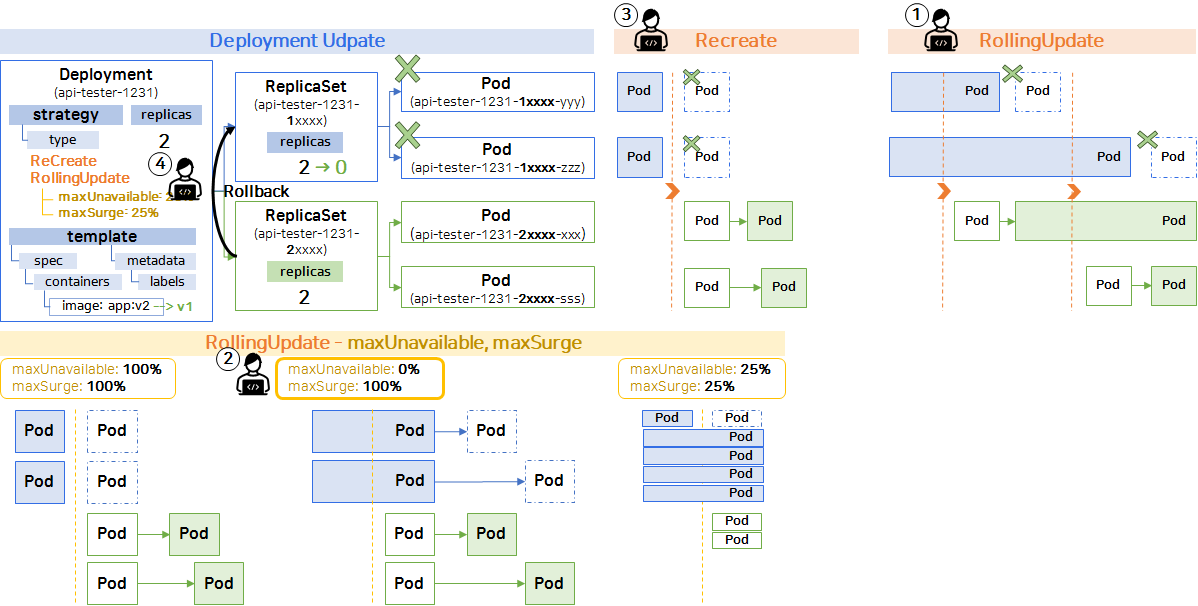

2. Perform a RollingUpdate (maxUnavailable: 0%, maxSurge: 100%)

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      namespace: anotherclass-123
      name: api-tester-1231
    spec:
      replicas: 2
      strategy:
        type: RollingUpdate
        rollingUpdate:
          maxUnavailable: 0%   # changed (was 25%)
          maxSurge: 100%       # changed (was 25%)

// Trigger the update (switch the image back to v1.0.0)

kubectl set image -n anotherclass-123 deployment/api-tester-1231 api-tester-1231=1pro/api-tester:v1.0.0

3. Perform a Recreate

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      namespace: anotherclass-123
      name: api-tester-1231
    spec:
      replicas: 2
      strategy:
        type: Recreate          # changed (was RollingUpdate)
        rollingUpdate:          # remove
          maxUnavailable: 0%    # remove
          maxSurge: 100%        # remove

// Roll back to the previous version

kubectl rollout undo -n anotherclass-123 deployment/api-tester-1231
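You can work out from the numbers how far each strategy above lets the Pod count move. Kubernetes rounds a percentage `maxSurge` up and a percentage `maxUnavailable` down. If both come out as 0, the Deployment controller uses a `maxUnavailable` of 1. Here is a small sketch with a hypothetical `rolling_bounds` helper.

```shell
# Sketch (hypothetical helper): convert RollingUpdate percentages into Pod counts.
# Usage: rolling_bounds <replicas> <maxSurge %> <maxUnavailable %>
rolling_bounds() {
  local replicas="$1" surge_pct="$2" unavail_pct="$3" surge unavail
  surge=$(( (replicas * surge_pct + 99) / 100 ))   # maxSurge: percentage rounded up
  unavail=$(( replicas * unavail_pct / 100 ))      # maxUnavailable: percentage rounded down
  # if both resolve to 0, the controller falls back to maxUnavailable=1
  if [ "$surge" -eq 0 ] && [ "$unavail" -eq 0 ]; then unavail=1; fi
  echo "maxSurge=$surge maxUnavailable=$unavail maxPods=$((replicas + surge)) minAvailable=$((replicas - unavail))"
}
```

With `replicas: 2`, the default 25%/25% gives at most 3 Pods and at least 2 available. The 0%/100% setting starts 2 new Pods before removing any old ones (up to 4 in total). Recreate has no such bounds. All old Pods are terminated before new ones are created, so availability drops to 0 during the switch.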

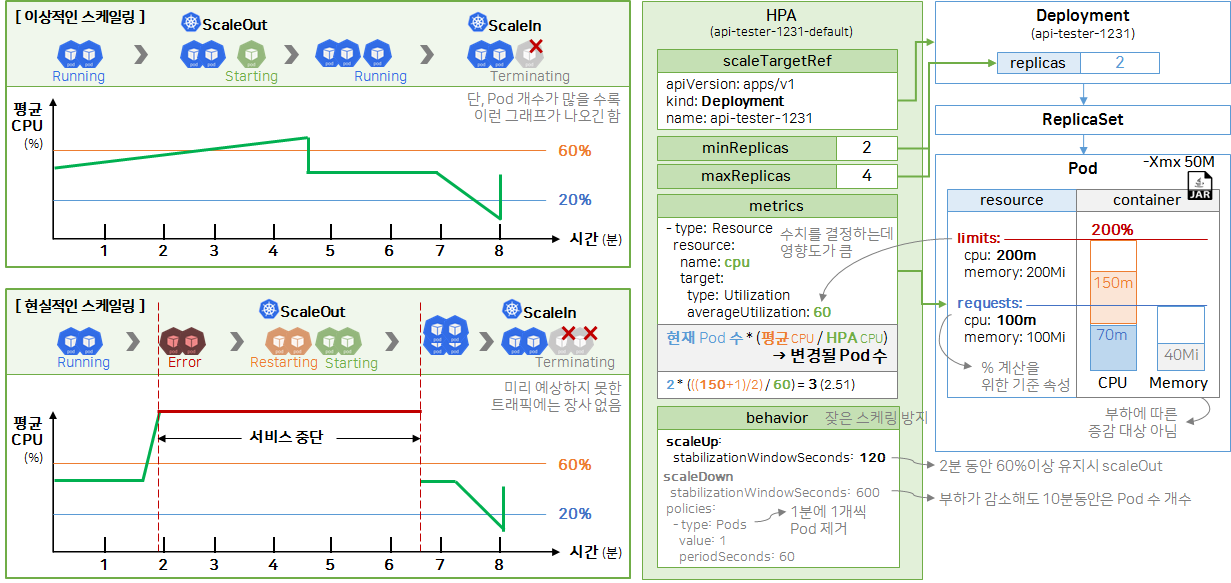

1. Generate load

http://192.168.56.30:31231/cpu-load?min=3 // generate load for 3 minutes

http://192.168.56.30:31231/cpu-load?min=3&thread=5 // generate 80% load with 5 threads for 3 minutes // defaults: min=2, thread=10
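The load URLs above can be wrapped in a small helper so the parameters are explicit. This sketch assumes the endpoint takes `min` and `thread` exactly as shown, and that the defaults are `min=2, thread=10` as noted. The helper name `start_cpu_load` is made up.

```shell
# Sketch (hypothetical helper): call the cpu-load endpoint with explicit parameters.
# min = duration in minutes, thread = number of threads (defaults as stated in this note)
BASE_URL="http://192.168.56.30:31231"

start_cpu_load() {
  local minutes="${1:-2}" threads="${2:-10}" url
  url="$BASE_URL/cpu-load?min=${minutes}&thread=${threads}"
  echo "requesting: $url"
  curl -s "$url"    # the response body is printed as-is
}
```

`start_cpu_load 3 5` corresponds to the second URL above. With no arguments, it sends the defaults explicitly.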

Check the load

// For near-real-time updates, checking with commands is faster

kubectl top -n anotherclass-123 pods

kubectl get hpa -n anotherclass-123

->

    [root@k8s-master ~]# kubectl get hpa -n anotherclass-123
    NAME                      REFERENCE                    TARGETS   MINPODS   MAXPODS   REPLICAS   AGE
    api-tester-1231-default   Deployment/api-tester-1231   86%/60%   2         4         2          3d5h

// Grafana lags a bit because its data comes through Prometheus

Grafana > Home > Dashboards > [Default] Kubernetes / Compute Resources / Pod
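You can turn the `86%/60%` reading with 2 replicas into the replica count the HPA aims for. The documented rule is desiredReplicas = ceil(currentReplicas × currentMetric / targetMetric). The HPA skips scaling when the ratio is within a tolerance (0.1 by default), and the result is clamped to minReplicas/maxReplicas. Below is a simplified sketch. `hpa_desired` is hypothetical, and it ignores unready Pods and missing metrics.

```shell
# Sketch (hypothetical helper) of the HPA rule for one utilization metric.
# Usage: hpa_desired <currentReplicas> <current %> <target %> <min> <max>
hpa_desired() {
  local cur="$1" util="$2" target="$3" min="$4" max="$5" desired
  # |util/target - 1| <= 0.1  <=>  90*target <= 100*util <= 110*target
  if [ $((util * 100)) -ge $((target * 90)) ] && [ $((util * 100)) -le $((target * 110)) ]; then
    desired="$cur"                                      # within tolerance: keep current count
  else
    desired=$(( (cur * util + target - 1) / target ))   # integer ceil(cur * util / target)
  fi
  if [ "$desired" -lt "$min" ]; then desired="$min"; fi
  if [ "$desired" -gt "$max" ]; then desired="$max"; fi
  echo "$desired"
}
```

`hpa_desired 2 86 60 2 4` gives 3 (2 × 86 / 60 ≈ 2.87). The later `138%/60%` reading at 3 replicas gives 7, capped at 4. The REPLICAS column can lag behind this number because the HPA re-evaluates periodically (every 15 seconds by default) and applies stabilization.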

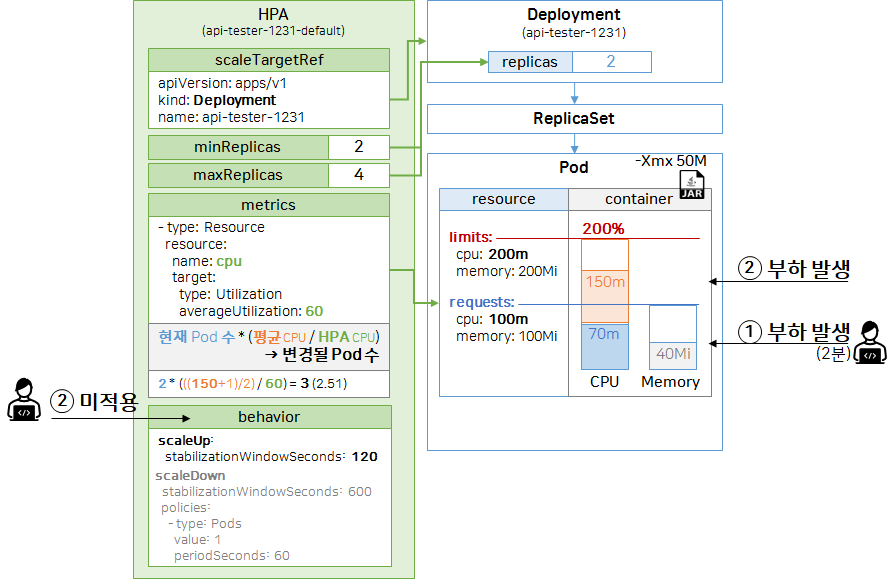

// 1. Delete the HPA

kubectl delete -n anotherclass-123 hpa api-tester-1231-default

// 2. Change the Deployment replicas to 2

kubectl scale -n anotherclass-123 deployment api-tester-1231 --replicas=2

// 3. Recreate the HPA

    kubectl apply -f - <<EOF
    apiVersion: autoscaling/v2
    kind: HorizontalPodAutoscaler
    metadata:
      namespace: anotherclass-123
      name: api-tester-1231-default
      labels:
        part-of: k8s-anotherclass
        component: backend-server
        name: api-tester
        instance: api-tester-1231
        version: 1.0.0
        managed-by: dashboard
    spec:
      scaleTargetRef:
        apiVersion: apps/v1
        kind: Deployment
        name: api-tester-1231
      minReplicas: 2
      maxReplicas: 4
      metrics:
        - type: Resource
          resource:
            name: cpu
            target:
              type: Utilization
              averageUtilization: 60
      behavior:
        scaleUp:
          stabilizationWindowSeconds: 120
    EOF

kubectl edit -n anotherclass-123 hpa api-tester-1231-default

---

    apiVersion: autoscaling/v2
    kind: HorizontalPodAutoscaler
    metadata:
      namespace: anotherclass-123
      name: api-tester-1231-default
    spec:
      behavior:                             # remove
        scaleUp:                            # remove
          stabilizationWindowSeconds: 120   # remove
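Deleting `behavior.scaleUp` puts the HPA back on the default scale-up stabilization window of 0 seconds. With the 120-second window, the controller scales up only to the lowest replica recommendation it computed during the last 120 seconds, so a short spike does not add Pods. Below is a simplified model of that scale-up side. `stabilized_scale_up` is hypothetical, and it ignores the scale-down window and scaling policies.

```shell
# Simplified model (hypothetical helper) of behavior.scaleUp.stabilizationWindowSeconds.
# Usage: stabilized_scale_up <currentReplicas> <recommendations within the window...>
stabilized_scale_up() {
  local current="$1" lowest r
  shift
  lowest="$1"
  for r in "$@"; do
    if [ "$r" -lt "$lowest" ]; then lowest="$r"; fi   # keep the lowest recommendation
  done
  # only scale up; never go below the current count in this model
  if [ -n "$lowest" ] && [ "$lowest" -gt "$current" ]; then
    echo "$lowest"
  else
    echo "$current"
  fi
}
```

For example, recommendations 3, 4, 3 inside the window with 2 current replicas give 3. A single 4 is not enough. Scale-down works the other way round. It uses the highest recommendation over its window (300 seconds by default). That likely explains why the final `kubectl get hpa` output in this note still shows 3 replicas at 2%/60%.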

http://192.168.56.30:31231/cpu-load

// generate 80% load with 10 threads for 2 minutes

// defaults: min=2, thread=10
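While the load from `/cpu-load` runs, you can poll the replica count instead of re-running `kubectl get hpa` by hand. The sketch below reads the HPA's `status.currentReplicas` and `status.desiredReplicas` fields through `-o jsonpath`. The helper `wait_for_replicas` and its timeout/interval arguments are assumptions made for this note.

```shell
# Sketch (hypothetical helper): poll the HPA until currentReplicas reaches a wanted value.
# Usage: wait_for_replicas <want> [timeout seconds] [interval seconds]
NS="anotherclass-123"
HPA="api-tester-1231-default"

wait_for_replicas() {
  local want="$1" timeout="${2:-300}" interval="${3:-10}" elapsed=0 cur desired
  if [ "$interval" -le 0 ]; then interval=1; fi   # avoid an endless loop with interval 0
  while [ "$elapsed" -le "$timeout" ]; do
    cur="$(kubectl get hpa -n "$NS" "$HPA" -o jsonpath='{.status.currentReplicas}')"
    desired="$(kubectl get hpa -n "$NS" "$HPA" -o jsonpath='{.status.desiredReplicas}')"
    echo "t=${elapsed}s current=${cur:-?} desired=${desired:-?}"
    if [ "$cur" = "$want" ]; then return 0; fi
    sleep "$interval"
    elapsed=$((elapsed + interval))
  done
  return 1   # timed out
}
```

`wait_for_replicas 3 300 10` polls every 10 seconds for up to 5 minutes. It returns 0 as soon as the HPA reports 3 current replicas.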

    [root@k8s-master ~]# kubectl get hpa -n anotherclass-123
    NAME                      REFERENCE                    TARGETS    MINPODS   MAXPODS   REPLICAS   AGE
    api-tester-1231-default   Deployment/api-tester-1231   138%/60%   2         4         3          3d5h

    [root@k8s-master ~]# kubectl get hpa -n anotherclass-123
    NAME                      REFERENCE                    TARGETS    MINPODS   MAXPODS   REPLICAS   AGE
    api-tester-1231-default   Deployment/api-tester-1231   2%/60%     2         4         3          3d5h